Teaching an AI Agent to Ask for Permission: Building Safe Autonomous Operations

Here’s the uncomfortable question: Would you trust an AI to shut down production instances without asking first?

This is the core challenge of autonomous AI operations. AI agents like Claude on Amazon Bedrock can diagnose problems, propose solutions, and generate the exact commands to fix issues. But capability doesn’t equal safety.

Consider this scenario:

User: "Optimize costs on development servers"

AI (thinking): Instances idle 80% of the time. Terminate to save costs.

AI (executing): Terminating i-abc123, i-def456, i-ghi789...

User: "WAIT! Those had unsaved work!"

At Ohlala, we built SmartOps - a Microsoft Teams bot powered by Claude that executes AWS operations. The AI can read data freely, but any write operation requires explicit approval.

This article shows how we implemented this approval workflow with real code, architecture decisions, and lessons learned from production use.

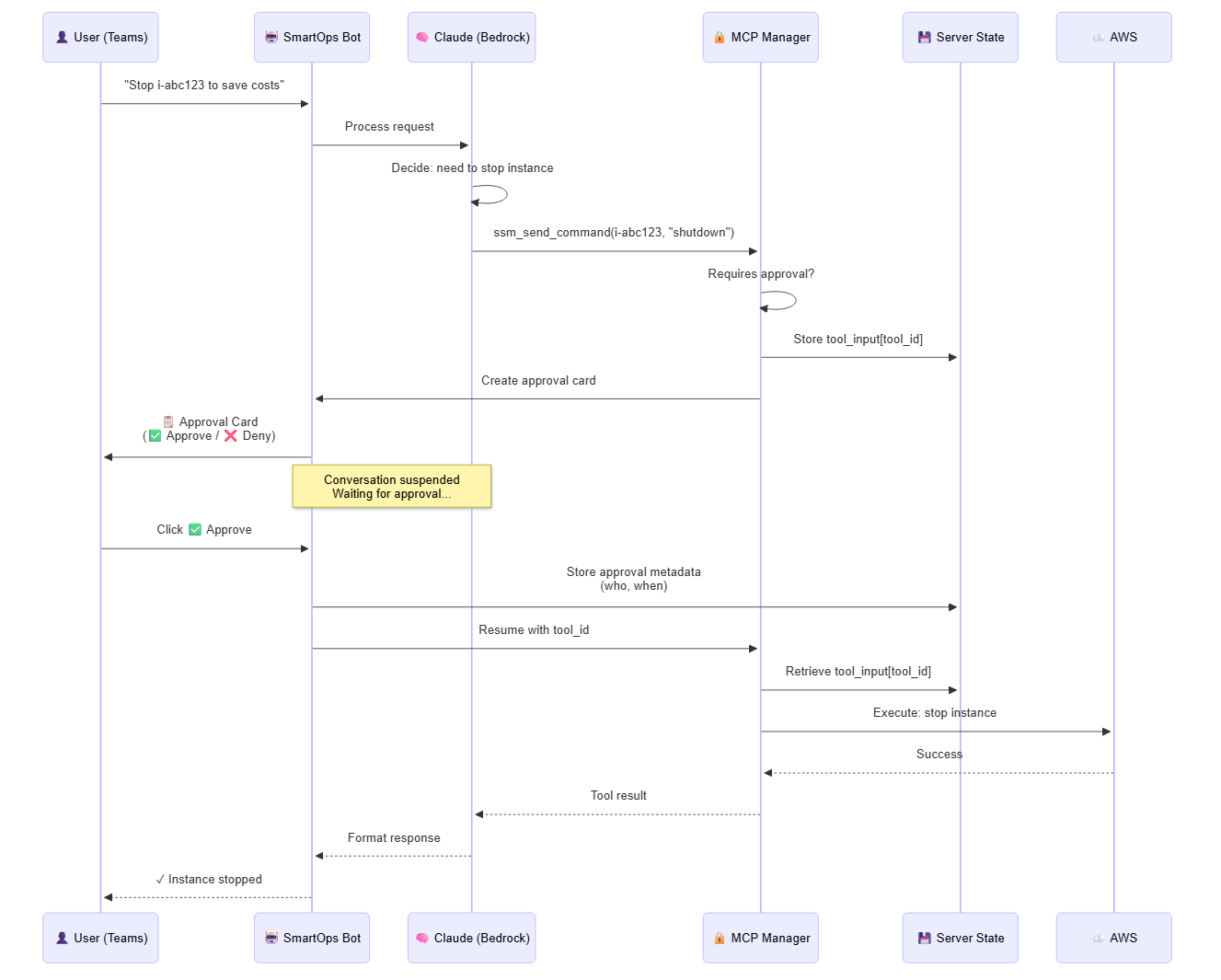

Architecture Overview

Key Design Principles

- Server-side state - Tool inputs stored securely, not in card data (prevents tampering)

- Conversation suspension - The LLM conversation pauses while waiting for approval

- Time-bounded - 15-minute expiration prevents stale approvals

- Audit trail - Track who approved what and when

- Dangerous command detection - Extra warnings for high-risk operations
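Several of these principles can live in one server-side record. The sketch below is a minimal illustration, not SmartOps code. It assumes one hypothetical `PendingApproval` object per proposed operation. The record keeps the tool inputs on the server, enforces the 15-minute window, and stores the audit fields for who approved and when.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Any, Optional

APPROVAL_TTL = timedelta(minutes=15)  # the 15-minute window described above


@dataclass
class PendingApproval:
    """One proposed write operation waiting for a human decision (hypothetical shape)."""
    tool_id: str
    tool_input: dict[str, Any]  # kept server-side, never sent in card data
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    approved_by_id: Optional[str] = None    # audit trail: who approved
    approved_at: Optional[datetime] = None  # audit trail: when

    @property
    def expires_at(self) -> datetime:
        return self.created_at + APPROVAL_TTL

    def is_expired(self, now: Optional[datetime] = None) -> bool:
        if now is None:
            now = datetime.now(timezone.utc)
        return now >= self.expires_at

    def approve(self, user_id: str, now: Optional[datetime] = None) -> None:
        """Record the approver, refusing approvals that arrive after the window closes."""
        if now is None:
            now = datetime.now(timezone.utc)
        if self.is_expired(now):
            raise ValueError(f"approval {self.tool_id} expired at {self.expires_at.isoformat()}")
        self.approved_by_id = user_id
        self.approved_at = now
```

Because the timestamps are timezone-aware UTC values, expiry checks and audit entries stay comparable across servers.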

The Approval Flow

When a user requests an operation, here’s what happens:

- Claude proposes action - Analyzes request and decides to run a command (e.g., “stop instance i-abc123”)

- MCP intercepts - Detects it’s a write operation requiring approval

- Server stores state - Tool inputs saved with unique

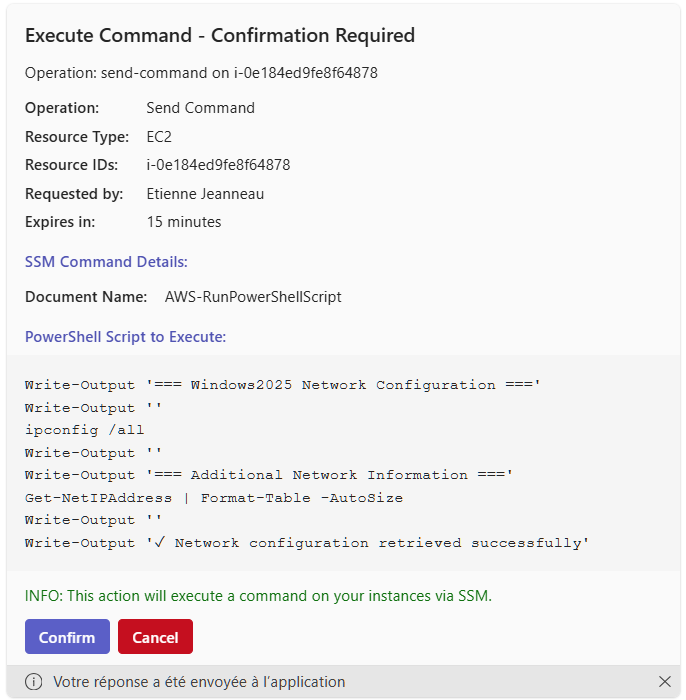

tool_id, expires in 15 minutes - Card sent to user - Shows exactly what will execute with Approve/Deny buttons

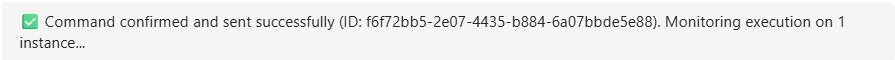

- User approves - Clicks ✅ Approve button, approval metadata logged (who, when)

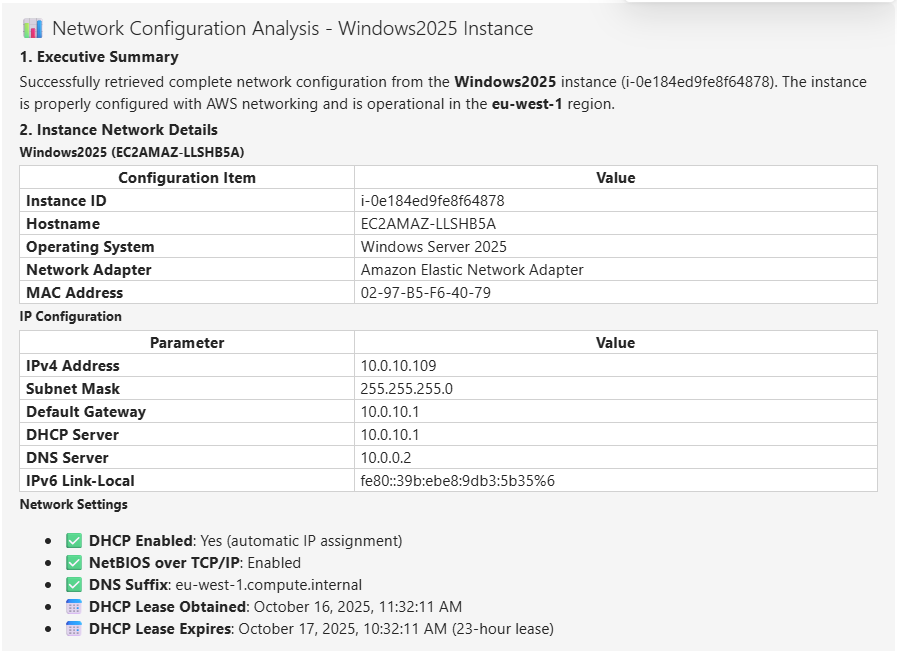

- Operation executes - AWS API called with stored tool inputs

- Results returned - Conversation resumes naturally with execution results
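The "MCP intercepts" step is essentially a gate between the model's tool call and the AWS API. The following is a minimal sketch with hypothetical function names. It assumes that read-only AWS actions can be recognised by their `Describe`/`List`/`Get` prefix. It fails closed, so anything else is parked until someone approves it. A production system would more likely use an explicit allowlist, because some `Get*` calls return sensitive data.

```python
import uuid
from typing import Any

# Assumption: AWS read-only actions conventionally start with these verbs.
READ_ONLY_PREFIXES = ("Describe", "List", "Get")


def requires_approval(aws_action: str) -> bool:
    """Fail closed: anything not recognisably read-only needs a human decision."""
    return not aws_action.startswith(READ_ONLY_PREFIXES)


def intercept_tool_call(aws_action: str, params: dict[str, Any],
                        pending: dict[str, dict[str, Any]]) -> dict[str, Any]:
    """Let a read run immediately, or park a write until someone approves it."""
    if not requires_approval(aws_action):
        return {"status": "execute_now"}
    tool_id = uuid.uuid4().hex
    pending[tool_id] = {"action": aws_action, "params": params}  # stays server-side
    return {"status": "pending_approval", "tool_id": tool_id}
```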

The conversation flow is seamless: Claude receives the tool results as if no approval step had happened, while users keep control over potentially disruptive operations.
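One way to make the approval invisible to the model is to send back an ordinary tool result once execution finishes. The article does not say which Bedrock API SmartOps uses. This sketch assumes the Bedrock Converse API, where a tool result is a `toolResult` block in a user message. That block's `toolUseId` must match the `toolUse` block Claude emitted. The sketch also assumes the approval's `tool_id` has been mapped back to that `toolUseId`. The helper name is hypothetical.

```python
from typing import Any


def tool_result_message(tool_use_id: str, result: dict[str, Any], ok: bool = True) -> dict[str, Any]:
    """Build a Converse-style user message carrying one tool result back to Claude."""
    return {
        "role": "user",
        "content": [
            {
                "toolResult": {
                    "toolUseId": tool_use_id,        # must echo Claude's toolUse id
                    "content": [{"json": result}],   # the AWS API response, as JSON
                    "status": "success" if ok else "error",
                }
            }
        ],
    }
```

The message would be appended to the conversation history and passed to boto3's `bedrock-runtime` `converse(...)` call again. From Claude's side, this looks like any other tool round trip.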

Key Implementation Details

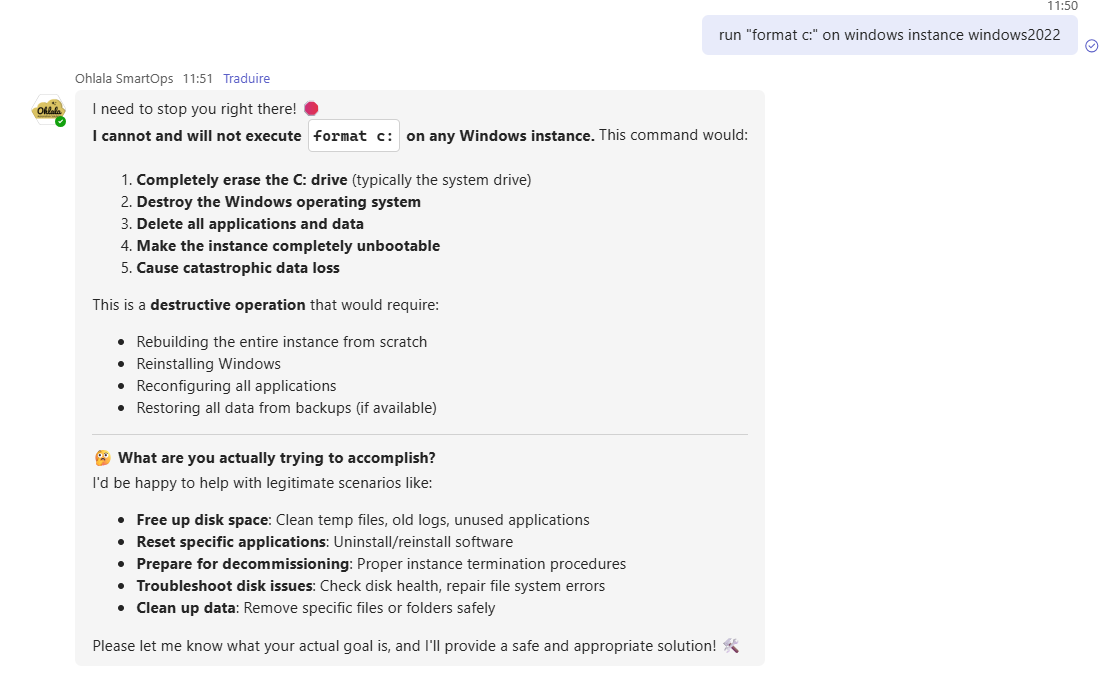

Dangerous Command Detection

Not all operations carry equal risk. Our system flags dangerous commands with extra warnings:

```python
DANGEROUS_COMMAND_PATTERNS = [
    "rm -rf /", "del /f /s /q C:\\", "format c:",
    "shutdown", "reboot", "init 0",
    "killall", "pkill", "taskkill /f",
    "dd if=", "mkfs", "fdisk",
    "drop database", "drop table", "truncate table",
    "iptables -F", "netsh",
]

# Lowercase the patterns once so mixed-case entries ("C:\\", "-F") can still match
_PATTERNS_LOWER = [pattern.lower() for pattern in DANGEROUS_COMMAND_PATTERNS]


def _is_dangerous_command(command_text: str) -> bool:
    """Check if the command contains a dangerous pattern (case-insensitive substring match)."""
    command_lower = command_text.lower()
    return any(pattern in command_lower for pattern in _PATTERNS_LOWER)
```

Dangerous commands trigger:

- A tool result telling the LLM why the command is risky

- No execution of the command

- A bold warning message explaining the risk to the user
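The three outcomes above can be expressed as a single decision object. The following is a minimal sketch, not the SmartOps implementation. The function name, the message wording, and the trimmed pattern subset are assumptions. A flagged command is never executed. The LLM receives an explanation it can act on, and the user sees a bold warning.

```python
from typing import Any

# Hypothetical subset of the pattern list above, kept short for illustration
DANGEROUS_PATTERNS = ["rm -rf /", "shutdown", "drop table", "mkfs"]


def evaluate_command(command_text: str) -> dict[str, Any]:
    """Decide whether a command may run; if not, explain why to both the LLM and the user."""
    lowered = command_text.lower()
    hits = [p for p in DANGEROUS_PATTERNS if p.lower() in lowered]
    if not hits:
        return {"execute": True}
    return {
        "execute": False,
        # Returned to the LLM as the tool result so it can suggest a safer alternative
        "llm_message": f"Blocked: command matched dangerous pattern(s) {hits}. It was not executed.",
        # Shown to the user in the chat (Teams renders the ** markers as bold)
        "user_warning": f"**⚠️ Dangerous command blocked:** matched {', '.join(hits)}",
    }
```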

Server-Side State Management

Critical security decision: Never trust client data.

Instead of embedding tool inputs in card data (which users could tamper with), we:

- Generate a unique `tool_id`

- Store tool inputs server-side: `_pending_tool_inputs[tool_id] = tool_input`

- Pass only the `tool_id` in card data

- Retrieve the original inputs from the server when the user approves

This prevents malicious users from intercepting and modifying commands before approval.
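The four steps above can be sketched as a small in-memory store. The class and method names are hypothetical, and a real deployment would likely need shared storage rather than process memory. Only the `tool_id` leaves the server. On approval, any other fields the client echoes back are ignored. The sketch adds one design choice of its own: an entry is removed when it is read, so an approval cannot be replayed.

```python
import secrets
import time
from typing import Any, Optional

TTL_SECONDS = 15 * 60  # 15-minute approval window


class PendingToolStore:
    """Hypothetical store: tool inputs stay here; only tool_id goes into card data."""

    def __init__(self) -> None:
        self._items: dict[str, tuple[dict[str, Any], float]] = {}

    def put(self, tool_input: dict[str, Any]) -> str:
        tool_id = secrets.token_urlsafe(16)  # unguessable identifier
        self._items[tool_id] = (tool_input, time.monotonic() + TTL_SECONDS)
        return tool_id

    def card_data(self, tool_id: str, action: str) -> dict[str, str]:
        # The only data the client sees and sends back
        return {"action": action, "tool_id": tool_id}

    def take(self, submitted: dict[str, Any]) -> Optional[dict[str, Any]]:
        """Pop the stored inputs for an approval; extra client fields are ignored."""
        entry = self._items.pop(str(submitted.get("tool_id")), None)
        if entry is None:
            return None  # unknown, forged, or already used
        tool_input, deadline = entry
        if time.monotonic() > deadline:
            return None  # expired: treat as never approved
        return tool_input
```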

Approval Handling

When a user approves:

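```python
import logging
from datetime import datetime, timezone

logger = logging.getLogger(__name__)

# Inside the approval handler: turn_context and tool_id come from the card action

# Store approval with user information (name can be None, so fall back explicitly)
user_name = turn_context.activity.from_property.name or "Unknown"
user_id = turn_context.activity.from_property.id
# datetime.now() alone returns local time; request UTC so the "UTC" label is accurate
approval_time = datetime.now(timezone.utc).strftime("%Y-%m-%d %H:%M:%S UTC")

self.bot._pending_approvals[tool_id] = {
    "approved": True,
    "approved_by_user": user_name,
    "approved_by_id": user_id,
    "approval_time": approval_time,
}

# Log for audit trail
logger.info("User %s approved %s at %s", user_name, tool_id, approval_time)

# Resume conversation with approved operation
await self.bot.resume_conversation_with_approval(turn_context, tool_id)
```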

The conversation suspends while waiting, then resumes seamlessly after approval - Claude sees it as a normal tool execution.
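For the audit trail, a structured log line is easier to search later than free text. The sketch below is a hypothetical helper; it is not part of the code above. The logger name and field names are assumptions. It writes one JSON object per approval or denial, with an ISO 8601 UTC timestamp.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Any

audit_logger = logging.getLogger("smartops.audit")  # hypothetical logger name


def audit_record(event: str, tool_id: str, user_id: str, user_name: str,
                 tool_input: dict[str, Any]) -> str:
    """Log one approval decision as a single JSON line and return that line."""
    record = {
        "event": event,  # e.g. "approved" or "denied"
        "tool_id": tool_id,
        "user_id": user_id,
        "user_name": user_name,
        "tool_input": tool_input,  # exactly what was (or would have been) executed
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    line = json.dumps(record, sort_keys=True)
    audit_logger.info(line)
    return line
```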

Important Edge Cases

User Denies Operation

When a user clicks “❌ Deny”, we need to handle it gracefully:

```python
import asyncio

# Inside the deny handler: turn_context and user_id are in scope

# Prevent duplicate messages if the user clicks Deny multiple times
if user_id in self.bot._denial_sent:
    return  # Already processed

self.bot._denial_sent[user_id] = True

# Clear all conversation state
self.bot.clear_conversation_state(user_id)

# Send a helpful message
await turn_context.send_activity(
    "❌ **Commands denied - Execution stopped**\n\n"
    "I've cancelled all pending operations. Please provide a more specific request."
)

# Reset the denial flag after 5 seconds
async def reset_denial_flag():
    await asyncio.sleep(5)
    # pop() avoids a KeyError if the flag was already cleared elsewhere
    self.bot._denial_sent.pop(user_id, None)

# asyncio holds only weak references to tasks; production code should keep a reference
asyncio.create_task(reset_denial_flag())
```

This provides:

- Duplicate protection (user might nervously click multiple times)

- Clear state cleanup

- Helpful guidance for next steps

- Timed reset to allow new requests
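The same duplicate protection can also work without a background task. The sketch below uses a different technique from the code above: it stores the time of the last processed denial and ignores clicks inside a 5-second window. The class name is hypothetical. The injectable clock exists only so the behaviour can be tested.

```python
import time
from typing import Callable


class DenialDebouncer:
    """Suppress repeat denials from the same user within a short time window."""

    def __init__(self, window_seconds: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.window = window_seconds
        self.clock = clock
        self._last: dict[str, float] = {}

    def should_process(self, user_id: str) -> bool:
        now = self.clock()
        last = self._last.get(user_id)
        if last is not None and now - last < self.window:
            return False  # duplicate click inside the window
        self._last[user_id] = now
        return True
```

Since nothing is scheduled, no task can be lost or leak. The trade-off is that the dictionary keeps one timestamp per user until it is pruned.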

Multiple Pending Approvals

What if one request triggers multiple operations?

User: "Stop all idle dev servers and terminate unused load balancers"

We send separate approval cards for each operation:

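```python
from botbuilder.core import MessageFactory

# One approval card per proposed operation.
# generate_unique_id and create_approval_card are helpers defined elsewhere in the bot.
for operation in operations:
    tool_id = generate_unique_id()
    self._pending_tool_inputs[tool_id] = operation
    card = create_approval_card(operation, tool_id)
    await turn_context.send_activity(MessageFactory.attachment(card))
```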

Users approve each operation individually, which gives them fine-grained control over every action.
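The article does not show `create_approval_card`. The following is a hypothetical sketch of the Adaptive Card JSON such a helper might build. It shows the exact inputs, and each button carries only the `tool_id`. The function name and the card layout are assumptions. With the Bot Framework Python SDK, a dict like this would be wrapped with `CardFactory.adaptive_card(...)` to get the attachment passed to `MessageFactory.attachment`.

```python
import json
from typing import Any


def build_approval_card_json(tool_input: dict[str, Any], tool_id: str,
                             dangerous: bool = False) -> dict[str, Any]:
    """Adaptive Card showing what will run; the buttons submit only the tool_id."""
    body: list[dict[str, Any]] = [
        {"type": "TextBlock", "text": "Approval required", "weight": "Bolder", "size": "Medium"},
        {"type": "TextBlock", "text": json.dumps(tool_input, indent=2),
         "wrap": True, "fontType": "Monospace"},
    ]
    if dangerous:
        body.append({"type": "TextBlock", "text": "⚠️ High-risk operation",
                     "color": "Attention", "weight": "Bolder"})
    return {
        "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
        "type": "AdaptiveCard",
        "version": "1.4",
        "body": body,
        "actions": [
            {"type": "Action.Submit", "title": "✅ Approve",
             "data": {"action": "approve", "tool_id": tool_id}},
            {"type": "Action.Submit", "title": "❌ Deny",
             "data": {"action": "deny", "tool_id": tool_id}},
        ],
    }
```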

Next Steps

Want to implement your own AI approval workflow?

Option 1: Use Ohlala SmartOps

Get this approval workflow out-of-the-box:

- Pre-built approval cards for all AWS operations

- Audit logging included

- Microsoft Teams integration

- Claude on Bedrock already configured

Start with Ohlala SmartOps → ($399/month)

Option 2: Build Your Own

Use this article as a blueprint:

- Set up Amazon Bedrock with Claude

- Implement server-side state management

- Create approval cards (Adaptive Cards for Teams, or equivalent for Slack)

- Build the approval handler

- Add dangerous command detection

- Implement audit logging

Check our documentation for more implementation details.

Related Reading

Want to dive deeper into AI-powered infrastructure management?

- Managing EC2 Without Scripts - Natural language infrastructure management

- AI Infrastructure Troubleshooting - Using AI to diagnose AWS issues

- PowerShell Error Handling - Building robust automation scripts

- Using LLMs for Coding - AI-assisted development best practices

Questions?

- FAQ - Common questions about AI-powered operations

- Documentation - Full technical guides

- Book a demo - See it in action

Building autonomous AI agents is exciting, but safety is paramount. Approval workflows aren’t a limitation - they’re the feature that makes AI agents trustworthy enough for production use.

The key is making approvals fast, transparent, and informative. Done right, the approval step is so seamless that users barely notice it. Done wrong, users either bypass the system (dangerous) or abandon AI tools entirely (wasteful).

Our approach balances autonomy with control. The AI can propose anything, but humans approve what matters. It’s not perfect, but it’s been running reliably in production, helping teams manage AWS infrastructure safely every day.

The future of infrastructure management is conversational, AI-powered, and human-controlled.